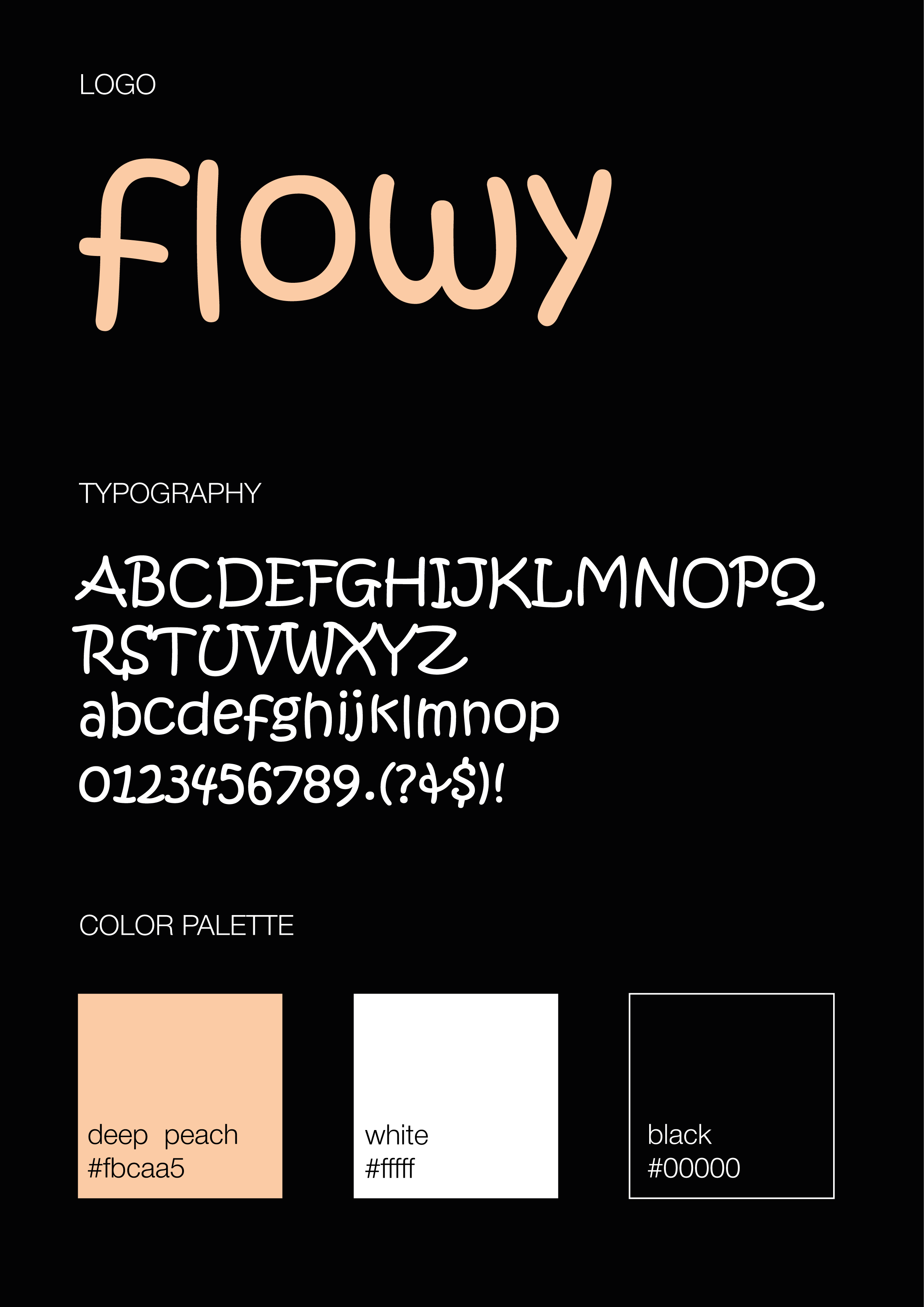

Abstract

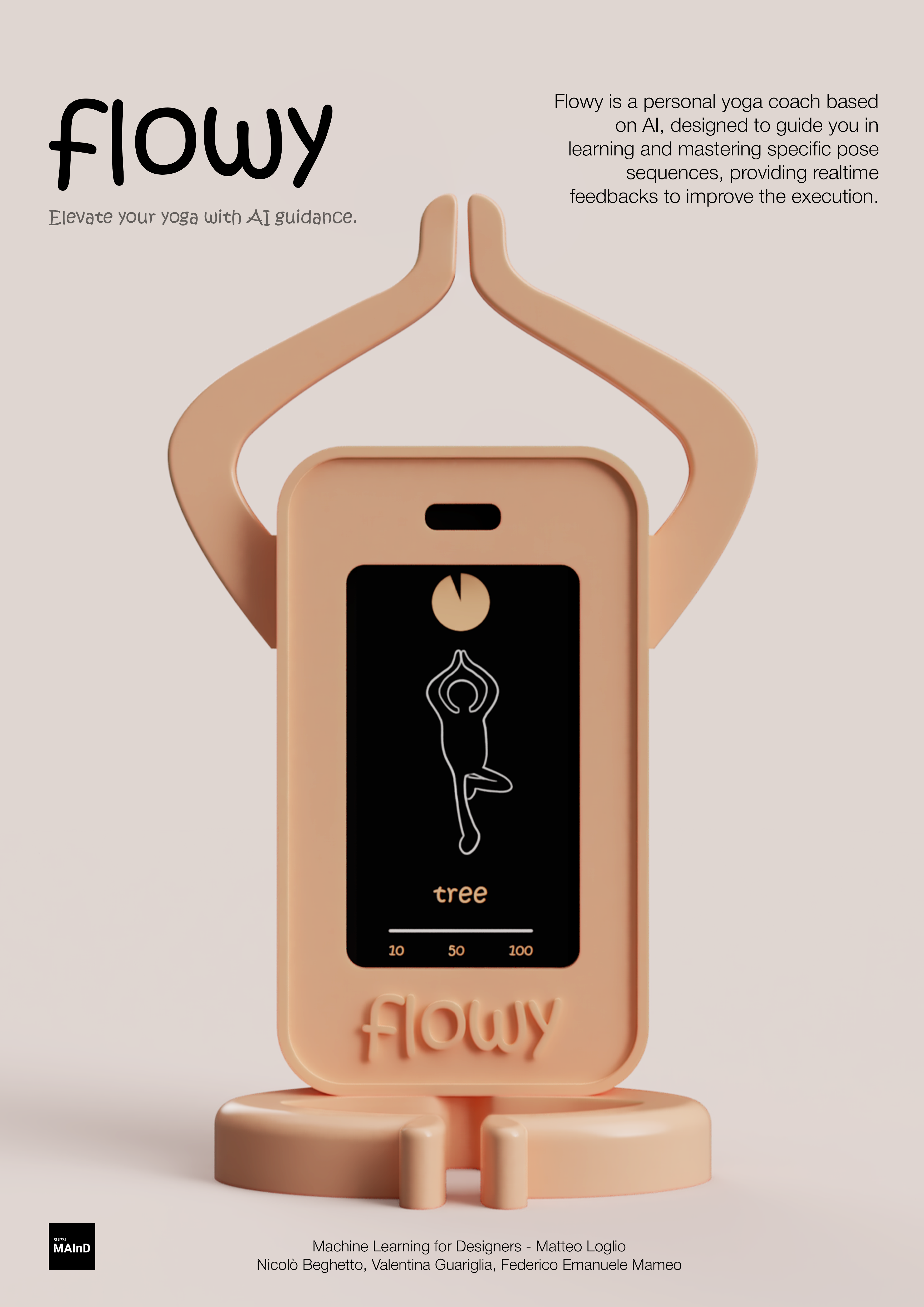

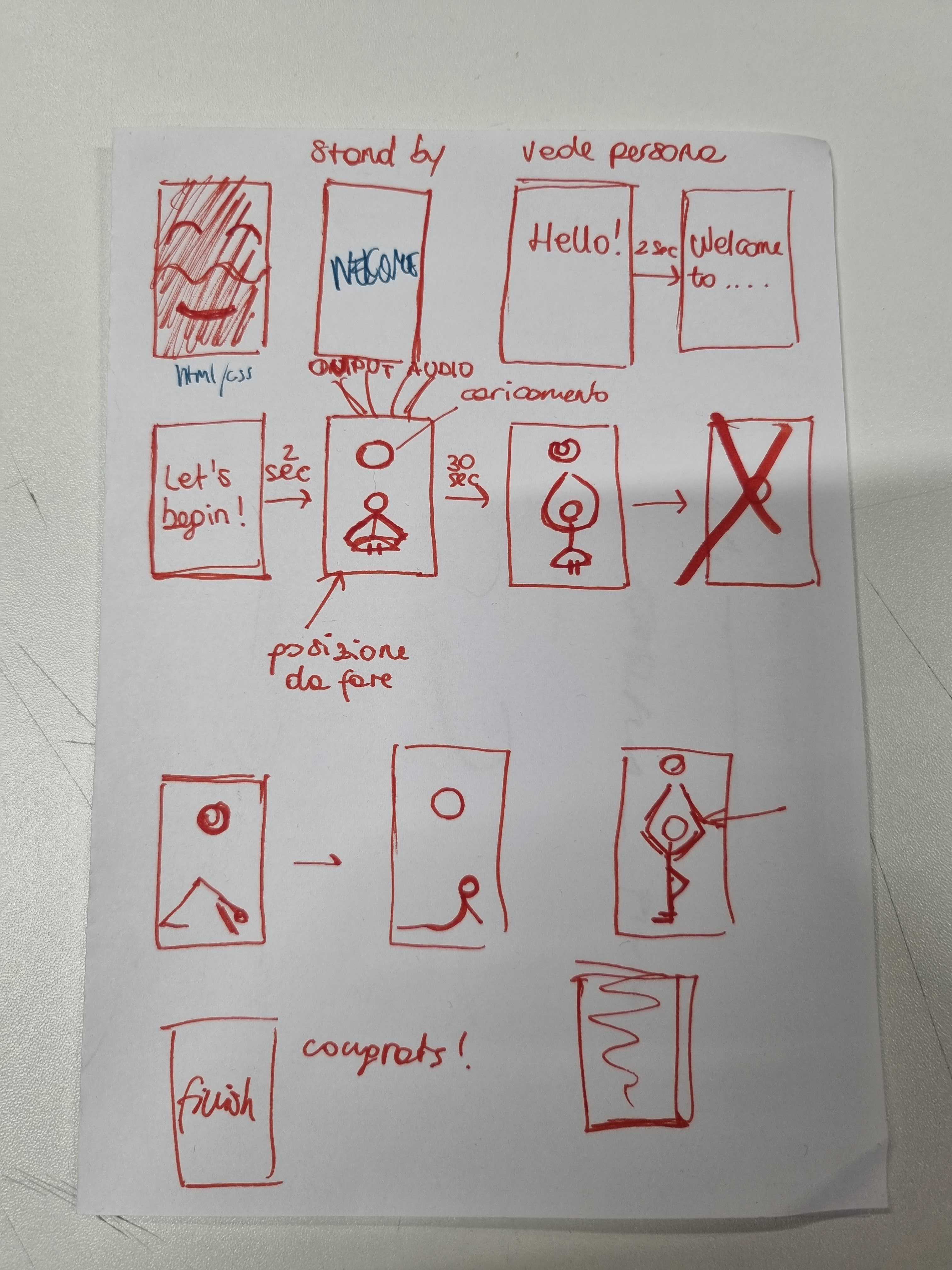

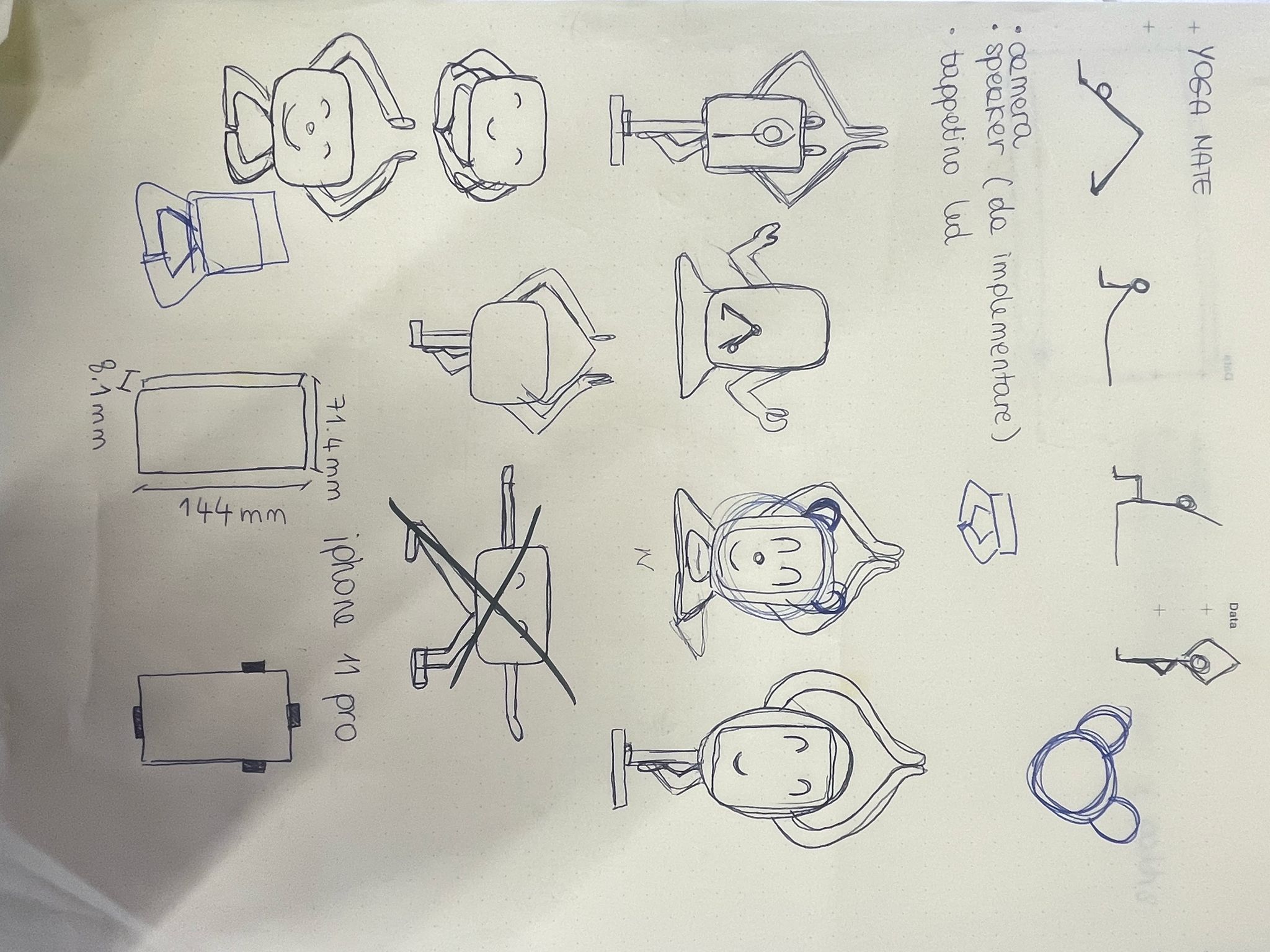

flowy is an AI-powered yoga instructor that analyzes your poses in real time and gives personalized suggestions for improving them. For each pose, it assigns a score based on how accurately you perform it, using one of three precision levels (10, 50, or 100) that represent the percentage of pose accuracy.
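The description does not say how accuracy is measured or how it maps to the three levels. The sketch below is one plausible reading, not flowy's actual implementation. It assumes a pose estimator supplies 2D keypoints, computes joint angles from them, and compares those angles with a reference pose. It then snaps the resulting percentage to the nearest of 10, 50, or 100. The names `joint_angle`, `pose_accuracy`, and `precision_level`, the joint names, and the 90° error cap are all hypothetical.

```python
import math

# Precision levels from the description above (percent accuracy).
PRECISION_LEVELS = (10, 50, 100)


def joint_angle(a, b, c):
    """Hypothetical helper: angle in degrees (0-180) at keypoint b formed by a-b-c."""
    ang = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    ang = abs(ang) % 360
    # Fold reflex angles back into the 0-180 range.
    return 360 - ang if ang > 180 else ang


def pose_accuracy(measured, reference, max_error=90.0):
    """Assumed metric: 100% minus the mean absolute angle error, where an error
    of max_error degrees or more counts as 0% for that joint."""
    if not reference:
        raise ValueError("reference pose has no joints")
    errors = [min(abs(measured[j] - reference[j]), max_error) for j in reference]
    return 100.0 * (1.0 - sum(errors) / (len(errors) * max_error))


def precision_level(accuracy):
    """Snap an accuracy percentage to the nearest precision level.
    Ties resolve to the lower level because PRECISION_LEVELS is ascending."""
    return min(PRECISION_LEVELS, key=lambda level: abs(level - accuracy))


if __name__ == "__main__":
    # Hypothetical reference angles (degrees) for two joints of a target pose.
    reference = {"left_elbow": 180.0, "right_knee": 90.0}
    measured = {"left_elbow": 171.0, "right_knee": 99.0}
    acc = pose_accuracy(measured, reference)
    print(f"accuracy={acc:.1f}% -> level {precision_level(acc)}")
    # prints: accuracy=90.0% -> level 100
```

Nearest-level snapping is chosen here only because it keeps the top level reachable without a perfect pose. A floor-based mapping, where a level is awarded only once the accuracy reaches it, would be an equally valid assumption.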